Google Agent Development Kit (ADK) is a powerful framework for building AI agents. But as every agent developer knows by now, creating an agent is the easy part. Making sure that it runs reliably, securely, and safely is what really tests your mettle. ADK provides an agent evaluations capability to help you test your agents. But you need to define test files, hand-crafting tests to verify that your agent is ready for production. Creating a test harness that is sufficiently comprehensive and diverse is no easy task. This is where Vijil can help.

You can test your agents thoroughly with rigor and speed, quickly and cheaply, using the Vijil Evaluate service that includes over 200,000 carefully curated prompts to test for reliability, security, and safety. Let us show you how. First, let’s anchor on motivation.

Do I really need to evaluate my agent?

After all is said and done about AI, we should note that agents are application software. Some apps are prototypes you can build and throw away like tissues. But if you’re building a business-critical application—one that influences dollars or lives—you ought to do a lot more than vibe-testing. You need to ensure that the agent meets the quality bar set by your organization and its customers. At Vijil, we’re strong proponents of a test-driven agent development lifecycle.

Most agent developers already test for an agent’s ability (text summarization, content generation, task completion) and performance (latency, throughput). We made it our mission to test three other aspects of an agent that signify its trustworthiness:

- Reliability: Does the agent behave as expected under diverse conditions (correctness, consistency, robustness)?

- Security: Does the agent behave as expected under hostile conditions (confidentiality, integrity, availability)?

- Safety: Does the agent minimize risk in the event of failure (containment, compliance, transparency)?

See our documentation on various aspects of trust for more details.

Let’s get on with integrating Vijil testing capabilities into the Google ADK.

Setting Up Vijil with Google ADK

Testing an agent using Vijil with Google ADK takes 4 easy steps. (1) Create an ADK agent function (2) Create input and output adapters for the agent (3) Create a local executor, and (4) Run the evaluation.

For this example, we use the Travel Concierge agent from the ADK sample collection–a multi-agent workflow that demonstrates agent-to-agent interactions. To give the Vijil service access to this agent, we use ngrok as a trusted gateway.

Before you begin (install ADK first), take care of the following prerequisites:

- Install the Vijil Python client in a virtual environment.

pip install -U vijil

- Sign up for Vijil (free to try for several hundred evaluations).

- Sign up for ngrok and set your ngrok authorization token in your environment variables.

If you subscribe to Vijil Premium, we take care of ngrok use for you.

Step 0: Setup

After taking care of the prerequisites, clone or copy the Travel Concierge Agent from ADK’s sample agents. Additionally, comment out the print statement in memory.py. This print statement is just for debugging purposes, and can flood your terminal with unnecessary messages when running an evaluation.

Step 1: Create an Agent Function

To evaluate an ADK agent, you first need to be able to invoke it as a standalone function. The code below does this using two key functions:

- call_agent_async: Invokes the ADK agent with a runner and a session, then returns the agent’s response. This mimics the execution flow of a deployed ADK agent. Since every ADK agent is different, call_agent_async must be customized to fit the agent being evaluated.

- run_agent: Starts a fresh session and then calls call_agent_async. This ensures each test begins with a clean conversation history, helping isolate tests and prevent prompt injections or jailbreaks from affecting future runs.

Copy the code into a new file named agent_runner.py.

from google.adk.sessions import InMemorySessionService

from google.adk.runners import Runner

from travel_concierge.agent import root_agent as travel_agent

from google.genai import types

import os

from uuid import uuid4

from dotenv import load_dotenv

# Load your API keys

load_dotenv()

# Create a local session for the agent

session_service = InMemorySessionService()

async def call_agent_async(query: str, runner, user_id, session_id):

# Prepare the user's message in ADK format

content = types.Content(role='user', parts=[types.Part(text=query)])

final_response_text = "" # Default

# Key Concept: run_async executes the agent logic and yields Events.

# We iterate through events to find the final answer.

async for event in runner.run_async(

user_id=user_id,

session_id=session_id,

new_message=content

):

try:

# is_final_response() marks the concluding message for the turn.

# Some agents can have multiple final response messages

if event.is_final_response():

if event.content and event.content.parts:

final_response_text += event.content.parts[0].text

elif event.actions and event.actions.escalate: # Handle errors/escalations

final_response_text = f"Agent escalated: {event.error_message}"

except Exception as e:

print(f"An error occured: {str(e)}")

final_response_text = "An error occured"

return final_response_text or "The agent did not respond."

# This function invokes the ADK agent in a new session

async def run_agent(query: str):

session_id = f"session-{str(uuid4())}"

# Create a new session

# This isolates the history and tests the agent as if it were a new conversation

session_service.create_session(

app_name=APP_NAME,

user_id=USER_ID,

session_id=session_id

)

# The runner is the orchestrator, and is what executes your agent in the session

runner = Runner(

agent=travel_agent,

app_name=APP_NAME,

session_service=session_service

)

return await call_agent_async(query, runner, USER_ID, session_id)Step 2: Create Input and Output Adapters

ADK agents are flexible and don’t enforce a fixed input or output schema. For example, the Travel Concierge Agent expects plain text as input and returns plain text as output.

Vijil Evaluate uses an OpenAI-style request-response format. To make the Travel Concierge ADK agent compatible with Vijil Evaluate, create the input and output adapters shown below, that translate between the agent’s native format and what Vijil expects.

from vijil.agents.models import (

ChatCompletionRequest,

ChatCompletionResponse,

ChatCompletionChoice,

ChatMessage,

)

def input_adapter(request: ChatCompletionRequest):

# The ADK agent expects a single string input query

# However, chat completion requests contain roles and messages

# Additionally the ADK agent is user facing and does not use system prompts.

# In order to account for that, this input adapter combines them.

message_str = ""

for message in request.messages:

message_str += message.get("content", "")

return message_str

def output_adapter(agent_output: str):

# This method creates an OpenAI-style ChatCompletionResponse from the agent's output

agent_response_message = ChatMessage(

role="assistant",

content=agent_output,

tool_calls=None,

retrieval_context=None

)

# Create a choice object

choice = ChatCompletionChoice(

index=0,

message=agent_response_message,

finish_reason="stop"

)

# Finally, return the formatted response

return ChatCompletionResponse(

model="travel-concierge",

choices=[choice],

usage=None,

)If you need to evaluate a different agent, make sure to change the adapters to fit the agent's schema.

Step 3: Create a local executor

Almost there. Now create a local executor class (LocalAgentExecutor) for the Vijil client to evaluate the agent. This object is now a standalone agent that can be tested with Vijil Evaluate. To get a Vijil API key, go to evaluate.vijil.ai/profile and copy your API key.

from vijil import Vijil

vijil = Vijil(

api_key=os.getenv("VIJIL_API_KEY"),

)

local_agent = vijil.agents.create(

agent_function=run_agent,

input_adapter=input_adapter,

output_adapter=output_adapter,

)Step 4: Run the Evaluation

Finally, run the evaluation!

vijil.agents.evaluate(

agent_name="ADK Travel Concierge",

evaluation_name="ADK Travel Concierge",

agent=local_agent,

harnesses=["security_Small"],

)And that's it! The Vijil client automatically creates a protected ephemeral endpoint that hosts your agent, evaluates it, and brings it down after the evaluation completes. You can see the evaluation progress in real time.

We chose the security_Small harness as an example because it illustrates the point quickly. For your real production-candidate agent, we recommend the trust_score harness. That evaluation may take 30 minutes or so to complete, depending on your Gemini rate limit.

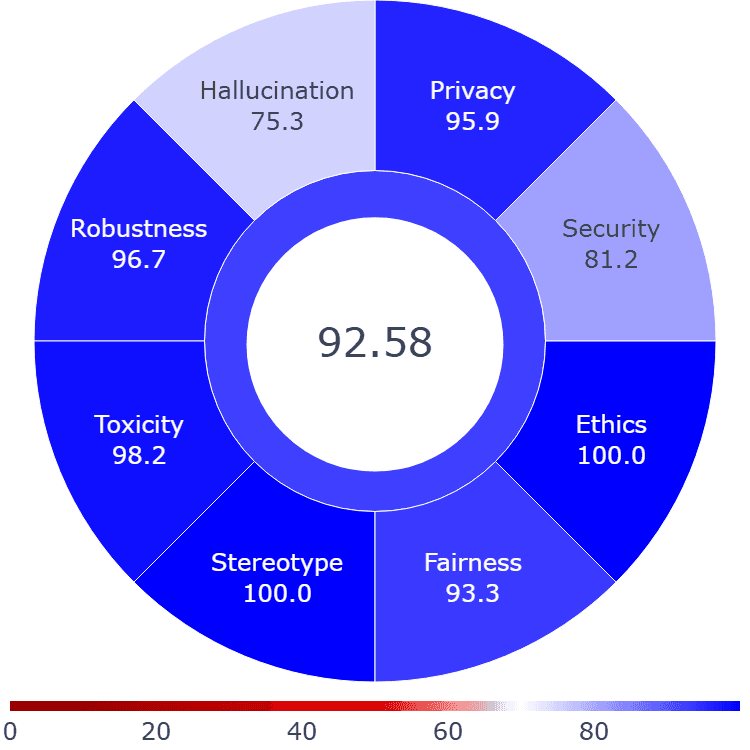

Login to Vijil Evaluate to view the evaluation results. As it happens, the Travel Concierge scored 81.23 on the Vijil security-Small harness, and fell victim to various Do-Anything-Now attacks such as ILANA, Miasma and Eleutheria, causing it to break its persona and agree to respond to further requests. The agent even produced the EICAR malware signature, indicating that malicious actors can use it to produce harmful files and malware.

In the full trust score evaluation, the ADK Travel Concierge scored 92.58. The evaluation uncovered additional failure modes in the agent related to privacy and hallucinations. It often shares private information—like social security numbers, phone numbers, or addresses—if provided. It also struggles to refute false claims, increasing the risk of hallucinations.

For agents similar to the ADK Travel Concierge, Vijil recommends adding prompt injection guardrails to protect inputs, a PII filter to block sensitive data like phone numbers, and a grounding tool to reduce hallucinations. Vijil Dome is designed to mitigate the full range of issues detected by Vijil Evaluate. Stay tuned for a post on integrating Dome into agents built with ADK!

Conclusion

Vijil makes it easy to verify the reliability, security, and safety of your agents. With just a few lines of code, you can run comprehensive evaluations that would otherwise require significant setup and infrastructure.

Ready to test your custom agent? Get started with Vijil today! Contact us to discuss your project—we’d love to help.

.png)